Beyond the Numbers

Understanding Effectiveness Through Research & Metrics

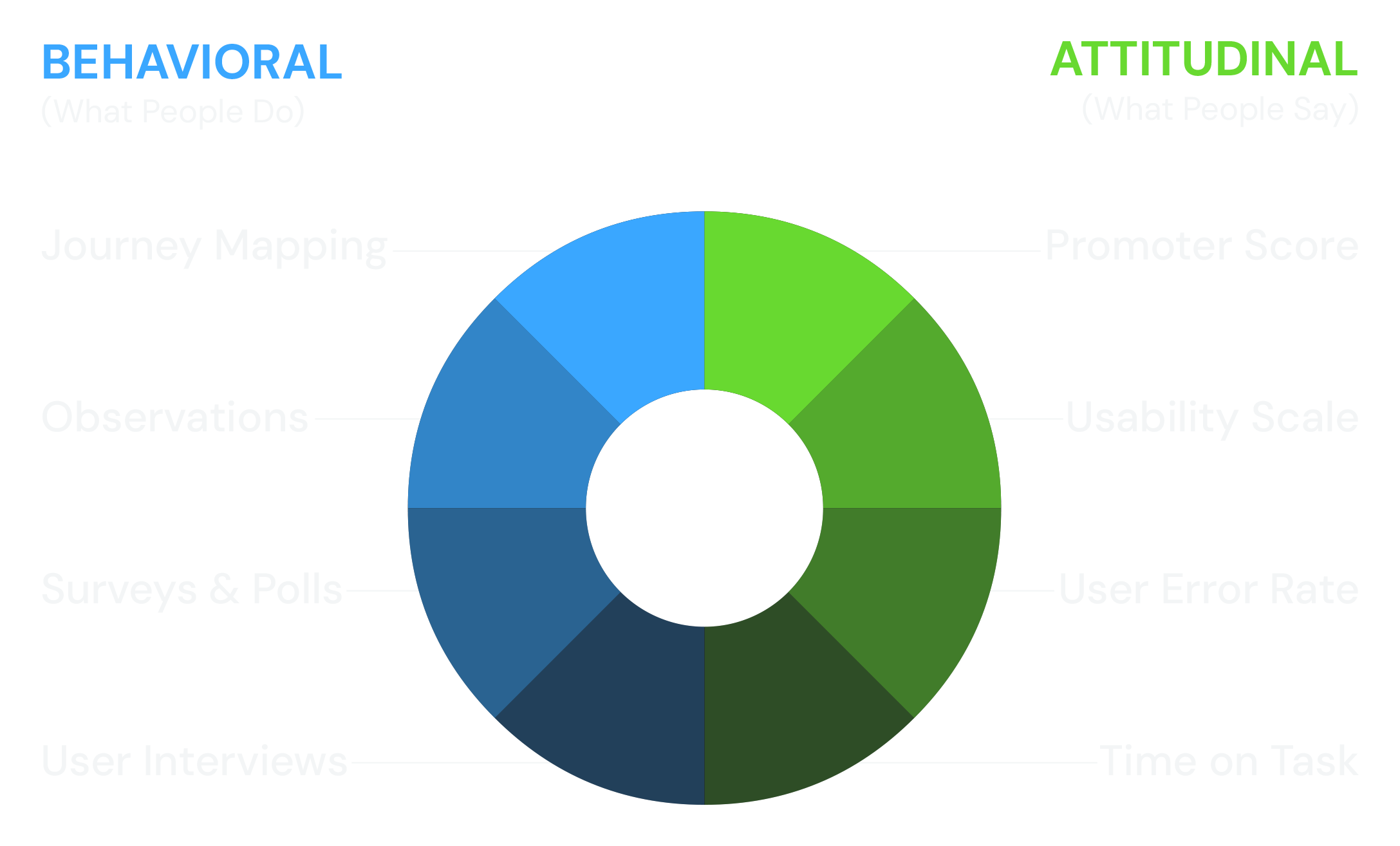

Success in design is not just about what works; it is about understanding why it works. While analytics and behavioral data provide valuable insights into user interactions, they do not always tell the full story. A high drop-off rate might indicate a usability issue, but without qualitative research, the underlying cause remains unclear. That is why I combine quantitative metrics like task success rates, A/B testing, and user session analytics with qualitative insights from user interviews, surveys, and usability testing. Together, these approaches uncover hidden friction points, validate design decisions, and guide meaningful improvements.

However, research is only as valuable as the actions it drives. Gathering data is the first step, but the real impact comes from translating findings into solutions. Whether it is refining an interaction flow based on session recordings or rethinking an entire feature due to recurring user feedback, I ensure research informs practical design decisions. In this article, I break down how I measure success, integrate research into the design process, and use data-driven strategies to enhance usability and drive business outcomes.

Qualitative Research & Usability Testing

To gain a complete picture of user behavior, I use a mix of research methods.

Common Methods

- User Interviews – Talking directly with users to uncover frustrations, motivations, and unmet needs.

- Onsite & Virtual Observations – Visiting users in their work environments or conducting remote usability sessions to see real-world behaviors.

- Surveys & Polls – Gathering structured feedback to complement behavioral insights.

- Journey Mapping – Understanding how users interact with the product at every touchpoint.

Research to Action: Turning Feedback into Solutions

User research is only valuable if it leads to real improvements. To get from feedback to fixes, I follow four steps:

- Identify patterns and trends from qualitative feedback.

- Cross-reference qualitative insights with behavioral data to see whether what users say matches what they do.

- Prioritize issues based on impact, feasibility, and business alignment.

- Work with product and engineering teams to implement and test improvements.
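
As a rough illustration of the prioritization step, the sketch below scores each issue on impact, feasibility, and business alignment, then ranks the results. The 1 to 5 rating scale, the default weights, the sample issues, and the names `Issue`, `priority_score`, and `rank_issues` are all assumptions made for this example, not a prescribed formula; in practice the weights would be agreed with product and engineering.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    """A research finding rated on three criteria (assumed scale: 1 = low, 5 = high)."""
    name: str
    impact: int        # how much fixing it would help users
    feasibility: int   # how easy it is to implement
    alignment: int     # how well it supports business goals


def priority_score(issue, weights=(0.5, 0.3, 0.2)):
    """Weighted sum of the three ratings. The default weights are illustrative only."""
    w_impact, w_feasibility, w_alignment = weights
    return (
        w_impact * issue.impact
        + w_feasibility * issue.feasibility
        + w_alignment * issue.alignment
    )


def rank_issues(issues, weights=(0.5, 0.3, 0.2)):
    """Return issues sorted from highest to lowest priority score."""
    return sorted(issues, key=lambda i: priority_score(i, weights), reverse=True)


# Hypothetical findings, invented for this example.
findings = [
    Issue("Unclear empty state", impact=2, feasibility=4, alignment=2),
    Issue("Checkout form errors", impact=5, feasibility=2, alignment=4),
    Issue("Hidden search filter", impact=3, feasibility=5, alignment=3),
]

for issue in rank_issues(findings):
    print(f"{issue.name}: {priority_score(issue):.1f}")
```

A weighted sum keeps the trade-offs visible: changing the weights shows how the ranking shifts when, for example, feasibility matters more during a short release cycle.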